Research

Our research aims to understand how humans perceive, act, and collaborate in immersive environments, and to apply this knowledge to extended reality (XR) systems that support interaction, learning, and creative performance. A common goal is to develop scientific and engineering principles that improve user experience, accessibility, and performance in virtual, augmented, and mixed reality (VR, AR, and MR).

Our research uses approaches from psychophysics, computational modelling, human–computer interaction, machine learning, and real-time motion analysis. The lab fosters collaboration between researchers in psychology, computer science, music technology, robotics, and the creative industries.

Funding for our work comes from:

- EPSRC

- BBSRC

- NHS

- Google Research

- Marie Skłodowska-Curie

- MSCA Doctoral Networks

- Royal Society

alongside support from, and collaboration with, industry partners such as:

- Meta / Facebook / Oculus

- Procter & Gamble

- Ansell Gloves

- Gillette

- ROLI

- Givaudan

- PartPlay

- Zubr

- Innogence

and cultural and charitable partners including:

- Museum of Music History

- Kenilworth Town Council

Main Research Areas

Fundamental Challenges in XR

This research area examines core problems in XR interaction where current technologies remain limited or where no single solution can address all use cases. Some challenges, such as realistic haptic feedback, are constrained by the physical limits of existing hardware and remain largely unsolved. Others, such as locomotion, text entry, object manipulation, and menu navigation, admit many competing solutions, each suited to specific tasks, environments, or user abilities.

Our research investigates how humans perceive and act under these constraints and develops principles that guide the design of effective interaction techniques. We study topics such as:

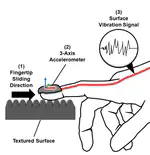

- Haptics and force feedback, where current systems cannot fully reproduce the richness of real-world touch.

- Locomotion in VR, where teleportation, joystick movement, redirected walking, and hybrid techniques offer different trade-offs in comfort, precision, and spatial awareness.

- Text entry and communication, where mid-air keyboards, gesture typing, speech, and AI-assisted input all perform differently depending on context.

- Embodiment and control, including how users adapt to virtual hands, tools, and full-body avatars.

By combining perceptual science, motion analysis, and engineering, this work identifies when and why particular solutions succeed, how users adapt to system limitations, and how XR interfaces can be designed to support diverse tasks and user groups. The outcomes include conceptual frameworks, open datasets, and validated guidelines for XR interaction design that are used by researchers and industry partners.

Interactive Experiences and Applications

This research area investigates how immersive technologies can support performance, collaboration, and audience engagement across music, sport, and cultural heritage. The work combines motion capture, computer vision, spatial audio, real-time visualisation, and AI-driven feedback systems to create interactive environments that respond to human movement. The programme includes several strands:

- ARME and virtual music ensembles, where motion capture, audio analysis, and avatar animation create rehearsal and performance environments for musicians. Outcomes include VR and AR rehearsal environments for string quartets, interactive iPad and Vision Pro applications, and museum installations. ARME is funded by EPSRC with industrial support from PartPlay, Semantic Audio, and the Museum of Music History.

- Sport visualisation and tracking, where high-fidelity motion capture and analytics provide real-time feedback for athletic performance, training, and rehabilitation.

- Cultural and heritage visualisation, including projects such as Kenilworth Revealed, which reconstruct historical sites in augmented reality to enhance public engagement and learning.

- Interactive installations and experiences, where movement, timing, and multisensory cues are used to create responsive museum exhibits, educational applications, and public-facing immersive artworks.

Interdisciplinary Research Networking

The lab is fundamentally collaborative and interdisciplinary. It is open to anyone who would like to collaborate, who has an idea and is looking for technical support, or who would like to use the lab's equipment. In this spirit, we founded BhamXR, a cross-disciplinary community of over 120 researchers at the University of Birmingham working on VR, AR, haptics, wearables, data science, performance, and creative technologies. The network promotes collaboration through seminars, workshops, research showcases, and training events. It also connects students with active research groups across Psychology, Computer Science, Engineering, Medicine, and the Arts.

Translation Activities

The lab maintains a strong portfolio of industry engagement and commercialisation activities, including several spinoffs:

- ObiRobotics – hand and body tracking technologies for robotics and XR

- MotionDynamics Ltd – motion capture and movement analytics for sport and rehabilitation

- MyJAMS Ltd – AI-enhanced virtual ensembles and immersive music practice environments

List of Current Projects

A selection of projects that are currently active in the lab.